LEARNING SOUND EVENTS FROM WEBLY LABELED DATA

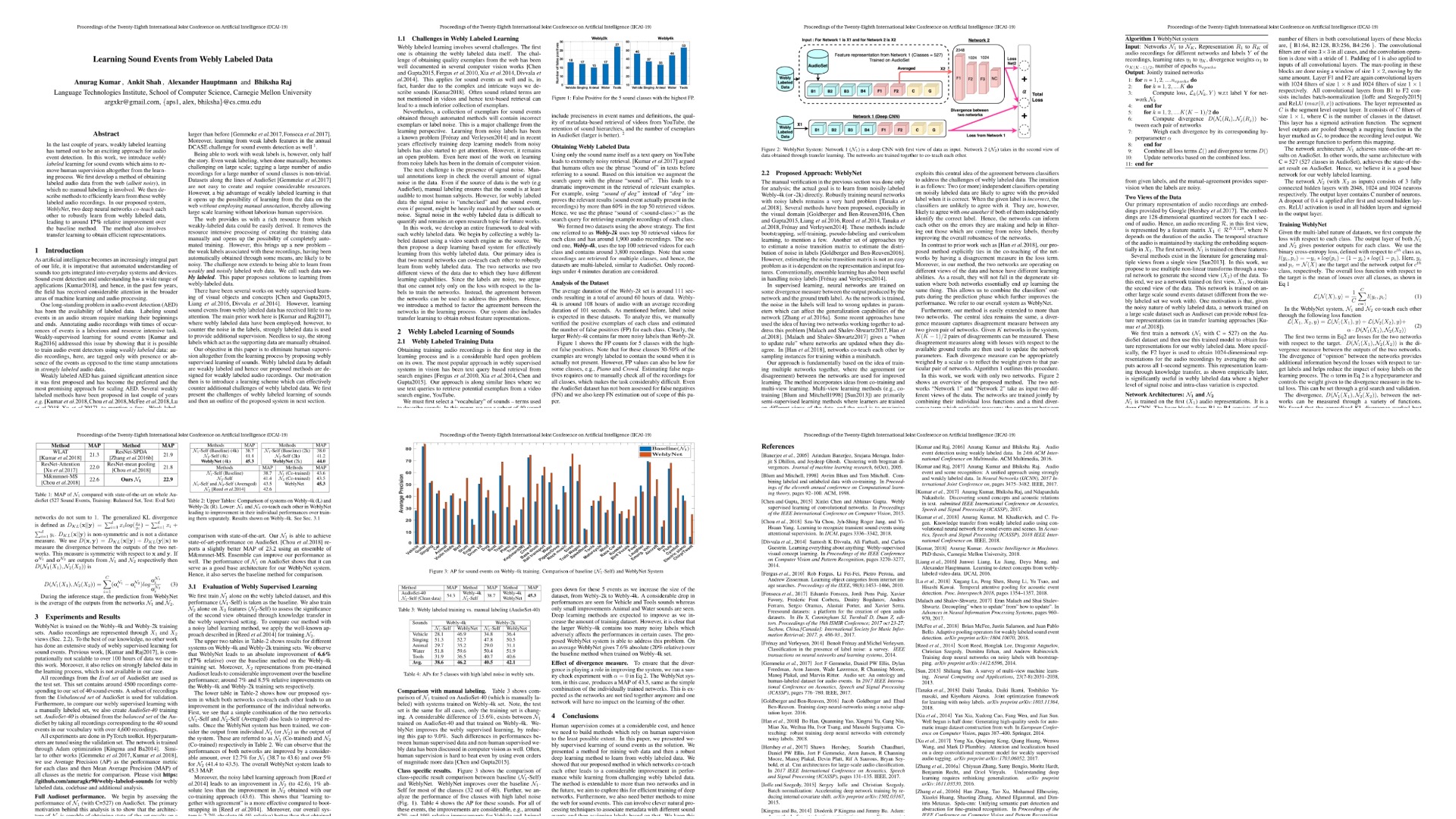

Multiple views of the data. Second view learned through transfer learning.

The two networks co-teach each other. This is done by measuring the (dis)agreement between them.
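As a rough illustration of how (dis)agreement between two networks can be measured, the sketch below computes a symmetric KL divergence between their per-class sigmoid outputs and adds it to each network's loss against the web labels. This is an assumption made for illustration, not the paper's confirmed formulation: the choice of divergence, the function names (`bernoulli_kl`, `disagreement`, `co_teaching_loss`), and the `weight` parameter are all hypothetical.

```python
import math


def bernoulli_kl(p, q, eps=1e-7):
    """KL divergence KL(p || q) between two Bernoulli distributions."""
    # Clip to avoid log(0); eps is an illustrative choice.
    p = min(max(p, eps), 1.0 - eps)
    q = min(max(q, eps), 1.0 - eps)
    return p * math.log(p / q) + (1.0 - p) * math.log((1.0 - p) / (1.0 - q))


def disagreement(probs_a, probs_b):
    """Symmetric KL between two networks' per-class probabilities, averaged over classes.

    Assumption: symmetric KL is used here only as one plausible divergence.
    """
    if len(probs_a) != len(probs_b):
        raise ValueError("both networks must score the same set of classes")
    total = sum(bernoulli_kl(a, b) + bernoulli_kl(b, a)
                for a, b in zip(probs_a, probs_b))
    return total / len(probs_a)


def binary_cross_entropy(probs, labels, eps=1e-7):
    """Mean binary cross-entropy of predictions against (possibly noisy) web labels."""
    losses = []
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)
        losses.append(-(y * math.log(p) + (1 - y) * math.log(1.0 - p)))
    return sum(losses) / len(losses)


def co_teaching_loss(probs_a, probs_b, labels, weight=0.5):
    """Each network's label loss plus a weighted disagreement penalty (hypothetical form)."""
    label_loss = binary_cross_entropy(probs_a, labels) + binary_cross_entropy(probs_b, labels)
    return label_loss + weight * disagreement(probs_a, probs_b)


if __name__ == "__main__":
    net_a = [0.9, 0.2, 0.7]   # made-up outputs of the first network
    net_b = [0.8, 0.3, 0.1]   # made-up outputs of the second network
    web_labels = [1, 0, 1]    # made-up labels taken from web metadata
    print(disagreement(net_a, net_b))
    print(co_teaching_loss(net_a, net_b, web_labels))
```

In this sketch the disagreement term is zero when both networks predict the same probabilities and grows as their predictions diverge, so minimizing the combined loss pushes the two views toward consistent outputs.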

Abstract

In the last couple of years, weakly labeled learning has emerged as an exciting approach for audio event detection. In this work, we introduce webly labeled learning for sound events, in which no manual annotations are used in the learning process. We first develop a webly labeled dataset in which labels for the audio recordings are obtained directly from the web, with no manual labeling. We then describe methods for learning from this webly labeled data. Specifically, our proposed method co-trains two deep neural networks to address the problems associated with webly labeled data. It also involves learning features from a large-scale pre-trained network. We present an in-depth analysis of our method, including a comparison with manually labeled data. Our dataset is publicly available for use by other researchers.

WeblyNet System Design

This paper is about learning from web data without involving human supervision in the process.

Webly Labeled Data

Click here for more details on the Webly-2k and Webly-4k datasets.

WeblyNet

Results

Some Additional Results and Analysis

Class-specific results and some additional discussion are available below.

Click Here For More Details and Results

Paper

Citation

Contact

For questions or comments, contact Ankit Shah or Anurag Kumar.